By: Jon Farrow

25 Oct, 2018

Researchers from two different CIFAR programs collaborate to show fruit flies can do more than previously thought possible.

Despite the simplicity of their visual system, fruit flies are able to reliably distinguish between individuals based on sight alone. This is a task that even humans who spend their whole lives studying Drosophila melanogaster struggle with. Researchers have now built a neural network that mimics the fruit fly’s visual system and can distinguish and re-identify flies. This may allow the thousands of labs worldwide that use fruit flies as a model organism to do more longitudinal work, looking at how individual flies change over time. It is also more evidence that fascinating research happens at the intersection of scientific disciplines.

In a project funded by a CIFAR Catalyst grant, researchers at the University of Guelph and the University of Toronto Mississauga combined expertise in fruit fly biology and machine learning to build a biologically based algorithm that churns through low-resolution videos of fruit flies. Their aim was to test whether a system with the constraints of a fly's visual system can physically accomplish such a difficult task.

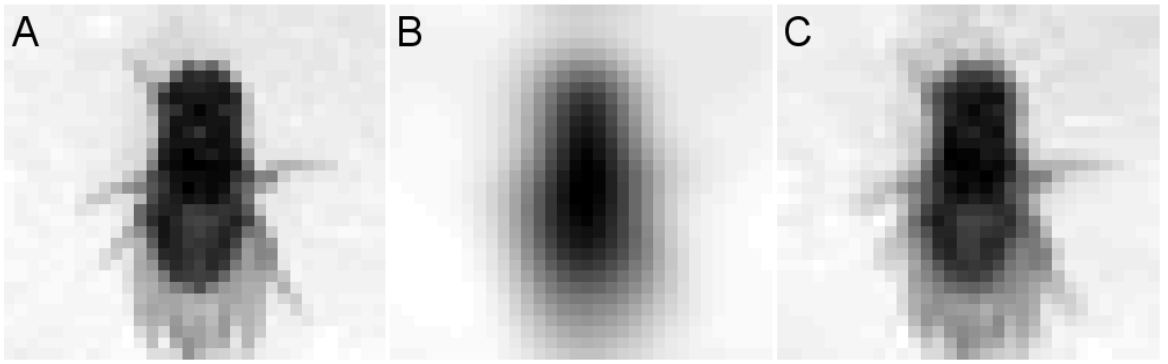

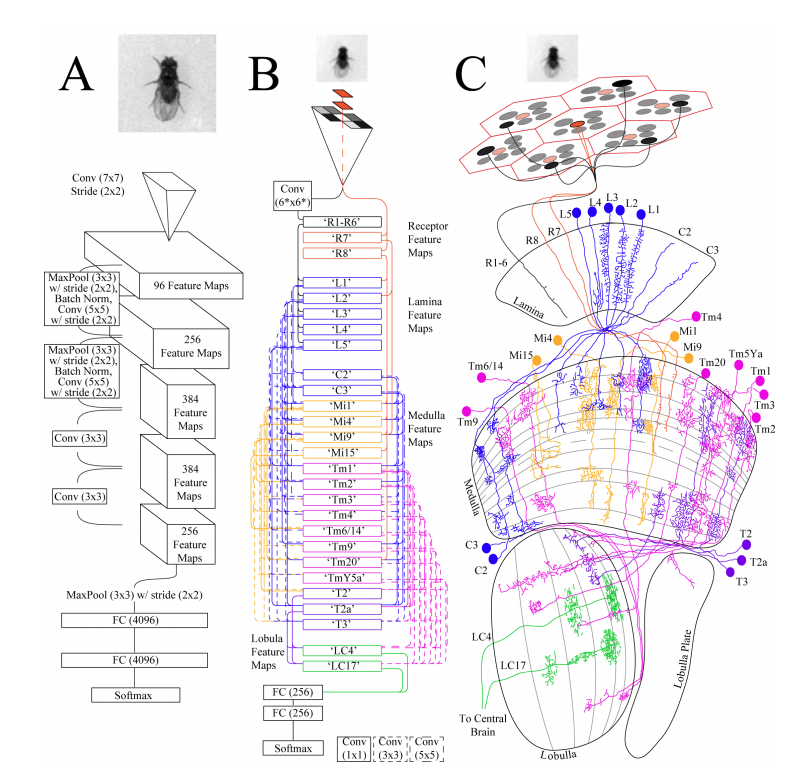

Fruit flies have small compound eyes that take in a limited amount of visual information, an estimated 29 units squared (Fig. 1A). The traditional view has been that once the image is processed by a fruit fly, it is only able to distinguish very broad features (Fig. 1B). But a recent discovery that fruit flies boost their effective resolution with subtle biological tricks (Fig. 1C) has led researchers to believe that vision could contribute significantly to the social lives of flies. This, combined with the discovery that the structure of their visual system looks a lot like a Deep Convolutional Network (DCN), led the team to ask: “can we model a fly brain that can identify individuals?”

Fig 1. How blurry is a fly’s vision? A) Ideal fruit fly input B) Traditional view C) Updated view

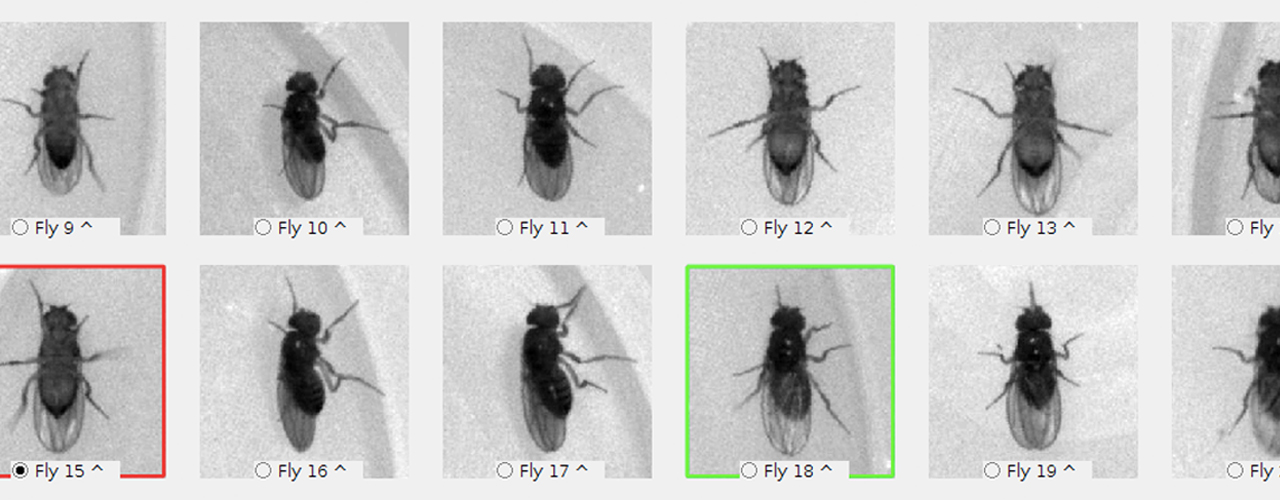

Their computer program has the same theoretical input and processing ability as a fruit fly and was trained on video of a fly over two days. It was then able to reliably identify the same fly on the third day with an F1 score (a measure that combines precision and recall) of 0.75. Impressively, this is only slightly worse than scores of 0.85 and 0.83 for algorithms without the constraints of fly-brain biology. For comparison, when given the easier task of matching the ‘mugshot’ of a fly to a field of 20 others, experienced human fly biologists only managed a score of 0.08. Random chance would score 0.05.
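To make these numbers concrete, here is a minimal sketch of how an F1 score combines precision and recall, and why guessing among 20 flies lands near 0.05. The counts are hypothetical, chosen only to reproduce a score of 0.75; they are not data from the study, and the paper's own multi-fly scoring procedure may differ from this single-class version.

```python
def f1_score(true_pos: int, false_pos: int, false_neg: int) -> float:
    """Harmonic mean of precision and recall for one class."""
    predicted = true_pos + false_pos   # everything the model labelled as this fly
    actual = true_pos + false_neg      # every frame that really was this fly
    precision = true_pos / predicted if predicted else 0.0
    recall = true_pos / actual if actual else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts (not from the paper): 75 correct identifications,
# 25 false alarms and 25 misses give precision = recall = 0.75.
example_f1 = f1_score(true_pos=75, false_pos=25, false_neg=25)

# Picking one fly at random from a field of 20 is right 1 time in 20.
chance_level = 1 / 20

print(f"example F1: {example_f1:.2f}")
print(f"chance level: {chance_level:.2f}")
```

Because F1 is a harmonic mean, a model cannot score well by excelling at only one of precision or recall; both have to be high.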

Fig 2: The machine and the fly A) Modern DCN machine learning algorithm B) Machine learning algorithm based on fly biology C) Connections in the fruit fly visual system

Jon Schneider, a postdoctoral fellow who splits his time between the labs of Joel Levine and Graham Taylor and is the first author of the paper being published in PLOS ONE this week, says this study points to “the tantalizing possibility that rather than just being able to recognize broad categories, fruit flies are able to distinguish individuals. So when one lands next to another, it’s ‘Hi Bob, Hey Alice.’”

The collaboration was born out of a cross-program CIFAR meeting that included researchers from both the Learning in Machines & Brains and Child & Brain Development programs. It was there that Levine and Taylor started talking about how machine learning might be harnessed to improve fly tracking, a notoriously difficult problem for biologists.

It wasn’t all smooth sailing, though. “I had this naive idea about what unsupervised learning means which led me and Jon Schneider to brainstorm some really cool experiments based on what Graham had done. And it was only much later we found out that it was ridiculous because our idea of what unsupervised learning [was] was wrong. But that’s a beautiful thing. Because those are the growing pains you need to be able to get those different areas to speak to one another, and once they do, [amazing things] happen.”

Graham Taylor, a machine learning specialist and CIFAR Azrieli Global Scholar in the Learning in Machines & Brains program, was excited by the prospect of beating humans at a visual task. “A lot of Deep Neural Network applications try to replicate and automate human abilities like facial recognition, natural language processing, or song identification. But rarely do they go beyond human capacity. So it’s exciting to find a problem where algorithms can outperform humans.”

The experiments took place in the University of Toronto Mississauga lab of Joel Levine, a senior fellow in the CIFAR Child & Brain Development program. He has high hopes for the future of research like this. “The approach of pairing deep learning models with nervous systems is incredibly rich. It can tell us about the models, about how neurons communicate with each other, and it can tell us about the whole animal. That’s sort of mind blowing. And it’s unexplored territory.”

All of the researchers involved in the study, including Nihal Murali, an undergraduate student on exchange from India, found crossing between biology and computer science exhilarating. Schneider summed up what it was like working between disciplines: “Projects like this are a perfect arena for neurobiologists and machine learning researchers to work together to uncover the fundamentals of how any system – biological or otherwise – learns and processes information.”