By: Jon Farrow

20 Nov, 2018

Our world might soon be filled with autonomous agents, so we should probably program them with moral compasses. But whose morality do we choose as the model?

Imagine driving down a road with your family in the car, when all of a sudden a cat jumps in front of the vehicle. Do you swerve to avoid the cat, risking a crash into a wall that will endanger you and your family, or do you keep driving straight? What if instead of a cat, it was a child? Or an elderly person? How does that change your moral calculation?

This is the essence of a famous philosophical dilemma called the trolley problem, which will soon become more than just a thought experiment. Many argue that, over the coming years as we relinquish responsibilities like driving to machines, we must program self-driving cars to weigh these considerations.

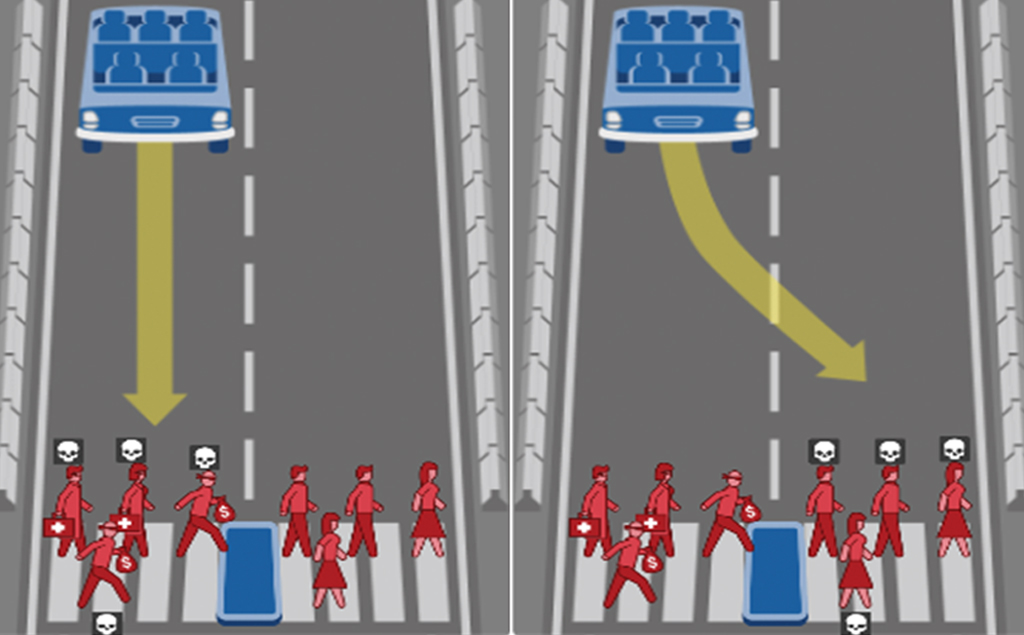

In order to understand who to save, researchers at MIT built a website called the Moral Machine that has been presenting millions of people with permutations of the trolley problem for the past two years. Two dogs and a bank robber in the car versus a jaywalking woman and a baby. Four female athletes versus four men crossing legally. The possibilities are nearly endless.

After 40 million responses in ten languages from 233 countries and territories, they published results in Nature that reveal the average person tends towards saving the young, the many and the human. This may seem like common sense, but a deeper dive into the data also revealed striking cultural differences. There is a stronger preference for sparing the elderly in Eastern countries like China and Japan, for example. Southern countries, like those in Latin America, tend towards sparing higher-status individuals. Western countries like Canada and the US prefer inaction to action, resisting self-driving vehicles going out of their way to hit pedestrians, no matter who is in the car.

With a dataset this large, there are countless ways to interpret the results, so to get a better idea of what it all means, we spoke with Joe Henrich, one of the paper’s authors. Henrich is a professor in Harvard’s Department of Human Evolutionary Biology and a CIFAR senior fellow in the Institutions, Organizations & Growth program.

We caught up with Professor Henrich over the phone a few days after the paper was published in Nature.

What was your involvement in the project?

I was mostly involved in figuring out how to analyze the data and understand the variation. Iyad Rahwan and his graduate student Edmond Awad did most of the heavy lifting. The rest of us were involved in various stages of the analysis and finding a kind of big-picture interpretation and analytical approach. Azim Shariff, another one of the authors, is a former student of mine, and so as the data was coming in, he approached me for advice on how to analyze it. So I started telling him what I thought. And then after that conversation they invited me to be an author and continue to advise.

Why are these results important?

When you look at the data, it should maybe make you question all kinds of policy decisions, because if people in different societies have fairly different moralities in terms of what they want to prioritize, the policy choices one would make are going to look quite different. Whether you prioritize the elderly, or the influence of status, or the importance of the majority, or all sorts of other social factors.

We aren’t trying to prescribe specific policy measures, but it’s got to be useful for policymakers to at least know how the rank and file, the people in these different countries, make choices about how they think the trade-off should go. Now the policymakers, they decide. But if that’s going to violate what most of the populace wants, you want to know that.

Were you surprised by any of the results?

There had been previous claims that there wasn’t very much cultural variation in these trolley-problem-type dilemmas, that people across the world would be relatively consistent in their answers. But from a couple of one-off experiments that I’d done and experimental work from others, I suspected there would be a lot of cultural variation. So what really intrigued me about the results was that there’s just a huge amount of cultural variation in how people balance these different trade-offs, like sparing the elderly or sparing passengers, sparing males or females.

One of the interesting things that I think was kind of puzzling is the question of doing the most good for the largest number of people – utilitarianism. Many people subscribe to a utilitarian worldview. But what we found is that the more utilitarian countries were those that were more individualistic. In other words, utilitarianism is a philosophy you think of once you’re sufficiently individualistic. Philosophers tend to think of it as coming out of the realm of pure thought, but actually you can think of it as a product of an individualistic psychology.

The other counter-intuitive result is the following: In general there’s a bias to favour women, so you don’t tell the car to swerve into women or crash the car with the woman in it. But in places where there’s greater gender inequality, there’s more favouritism towards women. By increasing gender equality and increasing the value of women in society, you also make people more likely to say ‘Well, the car should treat men and women more equally.’ So you actually hurt women more.

Given these cultural differences, who gets to program ethics into self-driving cars?

That’s a big open question. Because you could have each purchaser program their own system. Then presumably they would buy cars that always protect the passenger. But you could also imagine having regulations imposed. But you have to decide at what level these are written. Do we want global standards? Or do we have national standards? Local standards? This study showed there’s variation between countries, but there’s also lots of variation within countries. That’s actually one of our current efforts. We’re looking at India, for example, and exploring the regional variation of these differences within countries.

Are you having these sorts of conversations in the Institutions, Organizations & Growth meetings?

Well, I think those conversations have begun. And this study is a good starting point. As a group we are definitely interested in the effects of things like robots and artificial intelligence on the world. I haven’t seen a particular discussion of ethics or how to program ethics into artificial intelligence, but there are a number of members of the group interested in that and I can see it going in that direction.

What’s next for this project?

The data is really rich and this was really just our first hack at it. We just wanted to get the study out there and spark the debate. But now I have these economist post-docs in my lab and we’re going through it in much more detail. For example, we have the location of the individual when they answered the questions, so we can analyze things like ecological effects or cultural clusters within countries. And we can actually look at the effects of income at the very local level using satellite imagery. That sounds crazy, but satellite imagery at night can tell you about illumination for every spot on the globe. And illumination correlates strongly with economic growth and output. So that means we can assign for each region within a country a measure of their GDP, and then we’ll be able to look at things like income and how that relates to answers on the survey.
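To make the night-lights idea concrete, here is a minimal sketch of one simple way such a proxy could work: splitting a national GDP figure across regions in proportion to their total night-time brightness. This is an illustration only, not the authors' method. The function name `allocate_gdp_by_light`, the region names and all of the numbers are hypothetical, and real analyses would likely use a more careful statistical model than a straight proportional split.

```python
def allocate_gdp_by_light(national_gdp, region_light):
    """Split national GDP across regions in proportion to summed night-time light.

    Assumption (for illustration): economic output scales linearly with
    total brightness, so a region with 10% of the light gets 10% of GDP.
    """
    total_light = sum(region_light.values())
    if total_light <= 0:
        raise ValueError("total night-time light must be positive")
    return {
        region: national_gdp * light / total_light
        for region, light in region_light.items()
    }


# Hypothetical brightness totals per region (arbitrary units).
region_light = {"north": 120.0, "south": 60.0, "east": 20.0}

# Hypothetical national GDP of 1000 (arbitrary currency units).
estimates = allocate_gdp_by_light(1000.0, region_light)

for region, gdp in estimates.items():
    print(f"{region}: {gdp:.1f}")
```

With regional estimates like these in hand, each survey response could in principle be paired with an income measure for the place it came from, which is the kind of comparison Henrich describes.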

This interview was edited for length and clarity.

CIFAR is a registered charitable organization supported by the governments of Canada and Quebec, as well as foundations, individuals, corporations and Canadian and international partner organizations.