By: Justine Brooks

14 May, 2024

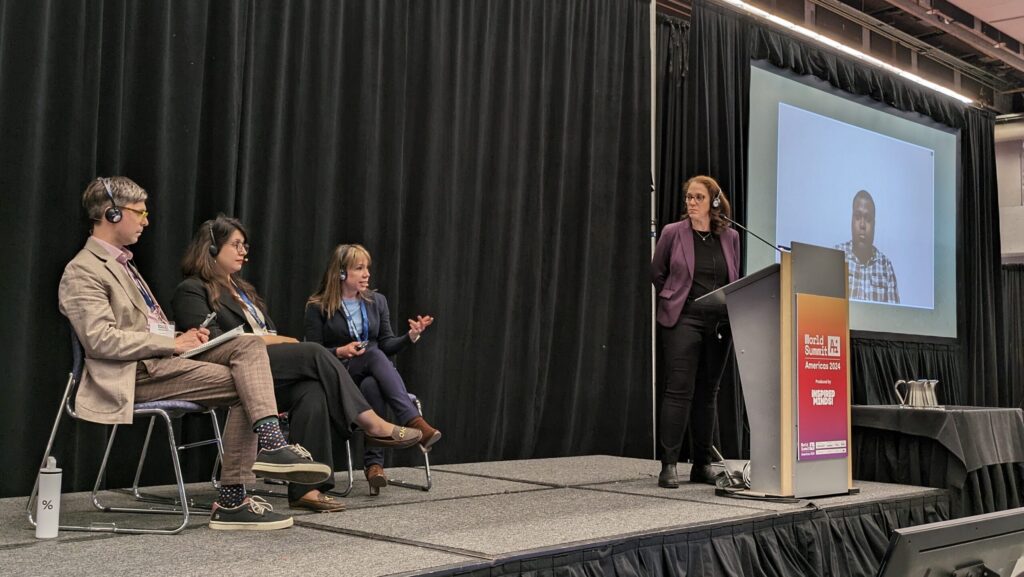

AI has been heralded by media around the world as the most influential technology since the printing press. But an important question looms: influential for whom? At this year’s World Summit AI Americas in Montréal, a panel moderated by Elissa Strome, Executive Director of the Pan-Canadian AI Strategy at CIFAR, featured experts who are grappling with just this question.

Titled “AI that serves the world,” the panel explored how these CIFAR-affiliated research leaders are advancing AI systems to serve communities historically ignored or harmed by technology.

“CIFAR’s central ethos, as affirmed by our recently-refreshed strategy, is to convene and mobilize the world’s most brilliant people across disciplines and at all career stages to advance transformative knowledge and solve humanity’s biggest problems, together,” comments Strome, reflecting on the theme of the panel discussion. “That last word, ‘together’ is vitally important. If we want to improve AI and realize its potential for good, we need to have these conversations with wider groups of people. This includes people whose lived experiences and needs have not always been valued or served by science and technology.”

The panelists spoke about the ways in which AI has failed some communities, by reinforcing existing prejudices or excluding them altogether, and how they’re working to address these oversights.

Inclusivity is key to any solution, said Golnoosh Farnadi, a Canada CIFAR AI Chair at Mila who is an assistant professor at Université de Montréal. “AI is a socio-technical challenge. We can’t treat it as just a technical one: it must be connected to society. Rather than building a system for people, those affected must be involved in the pipeline from the start.”

David Ifeoluwa Adelani, a newly appointed Canada CIFAR AI Chair at Mila who will start as an assistant professor at McGill University in August, spoke about the work his group is undertaking to develop machine learning models for under-resourced languages, such as African, Latin American and Indigenous languages. “Large language models such as ChatGPT present an opportunity to preserve minority languages, but it is important that this work is led by or involves those who are part of the communities they seek to serve. If we can get this right, then I see a lot of opportunity for language technologies such as machine recognition and speech recognition to be beneficial for these communities with minimal resources, since current models are more data efficient and can be used even without extensive content from sources such as the web.”

Also joining the panel was Colin Clark, Director of Design and Technology at the Institute for Research and Development on Inclusion and Society. Clark co-leads the inaugural CIFAR Solutions Network called Data Communities for Inclusion, which develops resources that help under-served cooperative communities adopt AI tools for societal and economic benefit in low-resource settings. In considering contemporary discussions of hypothetical risks from AI, Clark noted, “While it is important to think about the future of AI, it is also important to consider the impact AI is already having today on marginalized communities. We must move away from designing systems for marginalized people, and instead, adopt a community-led design practice that involves their diverse experience and knowledge from the start.”

Catherine Régis, a professor at Université de Montréal who is a Canada CIFAR AI Chair at Mila, pointed out the stark gaps in current regulatory practices. “Self-regulation is not enough — we must have more transparency and oversight of those developing AI systems. We must also evaluate the risks associated with a system before they launch, using tools such as impact assessments.”

“AI has the potential to do a lot of good in the world, but it can also reinforce and amplify existing issues if we don’t take care,” says Strome. “By continuing to support the work of leading researchers such as these, the Pan-Canadian AI Strategy at CIFAR is setting the stage for the future of global AI safety, fairness and inclusion.”